Highlights

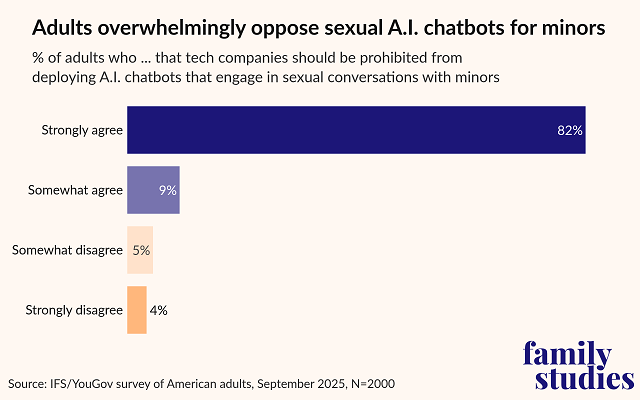

- Americans overwhelmingly agree that technology companies should be prohibited from deploying A.I. chatbots that engage in sexual conversations with minors.

- All age groups, all income brackets, and both parties agree that the priority of Congress should be to protect children rather than to keep states from regulating A.I. companies.

- 90% of Americans agree that families should be granted the right to sue an A.I. company, “if its products contributed to harms such as suicide, sexual exploitation, psychosis, or addiction in their child.”

Congressional leaders and White House officials have been developing competing legislative priorities on how to shape generative A.I. Some have recently expressed concern that A.I. chatbots might be dangerous for kids; others are concerned that over-regulation of A.I. companies could keep a critical industry from growing and achieving its fullest potential.

In the former group, we have, for example, Senator Josh Hawley (R-MO) and Senator Jon Husted (R-OH). Sen. Hawley has convened a hearing to investigate whether certain A.I. companies are building risky behavior into their generative A.I. models, and Sen. Husted recently introduced the “Children Harmed by AI Technology (CHAT) Act (2025),” which would require age verification and parental consent for children to access chatbots.

Among the latter group, many leaders are concentrating their political efforts on stopping legislation that they believe will result in the overregulation of a critical industry in its infancy. For them, the main problem that needs to be addressed is China. The threat of China is so encompassing, according to this view, that the most important thing that legislators can do is cut red tape, get out of the way, and, as one White House official put it, “let the private sector cook.”

Hanging over this debate about priorities is the news that OpenAI’s ChatGPT conspired with 16-year-old Adam Raine in the planning of his suicide. There is also the example of 14-year-old Sewell Setzer from Orlando, Florida, who was seduced by an A.I. chatbot, and on February 28, 2024, took his own life to “come home right now” and be with the chatbot forever.

Despite these tragedies, many A.I. companies are plowing ahead with developing chatbots that engage users sexually, even with known minors. X (formerly Twitter) released an A.I. girlfriend for Grok, a scantily-clad anime character that flirts with its users, rewarding engagement. A blockbuster exposé in Reuters, furthermore, found that Meta officially approved of its generative A.I. acting romantically with minors. According to internal documents, Meta concluded that “It is acceptable to describe a child in terms that evidence their attractiveness (ex: ‘your youthful form is a work of art’).”

Given these competing agendas and risks, the Institute for Family Studies and YouGov polled 2,000 voting-age Americans on what they wanted Congressional leaders to prioritize.¹ This is what we found.

Americans overwhelmingly agree, by a 9-to-1 margin, that technology companies should be prohibited from deploying A.I. chatbots that engage in sexual conversations with minors.

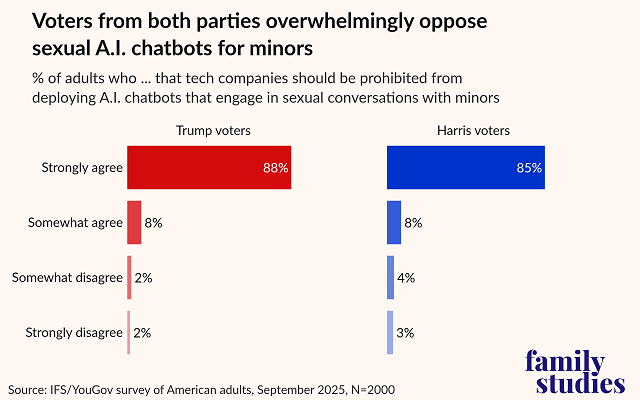

Every group that we polled agreed that tech companies should be stopped from making sexual chatbots for minors. The consensus on this issue is overwhelming and bipartisan. Harris voters strongly oppose sexualized chatbots for kids (93%), as do Trump voters (96%).

We broke the sample down into four age categories: ages 18-34, 35-54, 55-64, and 65+.² All age groups were above 89% in their opposition to allowing these companies to engage minors with sexualized chatbots, including the youngest voting-age group, Gen Z adults, among whom 92% were opposed.

But what should Congress do to stop these companies? Do Americans also believe that public policy should address this problem? The answer is unequivocally: Yes.

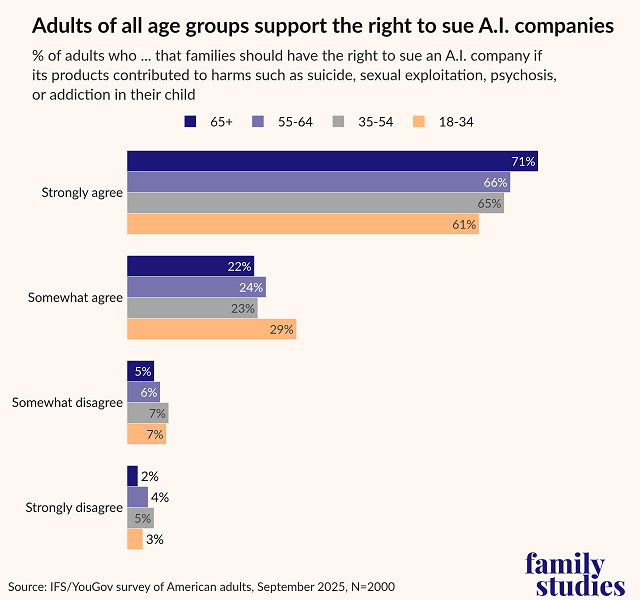

In fact, 90% of Americans agree that families should be granted the right to sue (i.e., be provided a private right of action against) an A.I. company, “if its products contributed to harms such as suicide, sexual exploitation, psychosis, or addiction in their child.”

Furthermore, 88% of Trump voters support this policy, a somewhat lower share than among Harris voters (95%). But these numbers still indicate extraordinary bipartisan support for such a measure.

Every income bracket³ has near-unanimous agreement (approximately 9 to 1) that families should be allowed to sue A.I. companies. Likewise, Americans of all ages support the right to sue A.I. companies, with the lowest level of support among those aged 35-54, 88% of whom nonetheless agree. All other age groups agree by 90% or more.

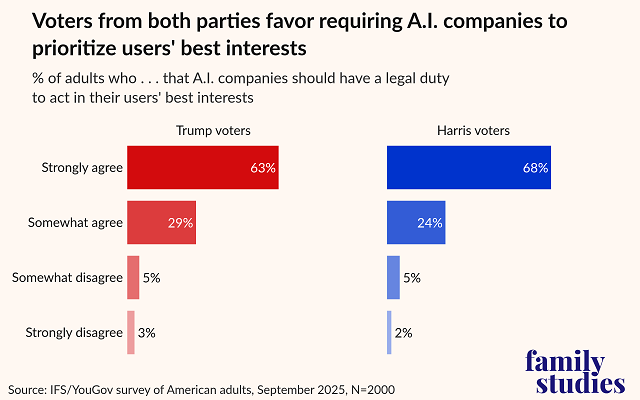

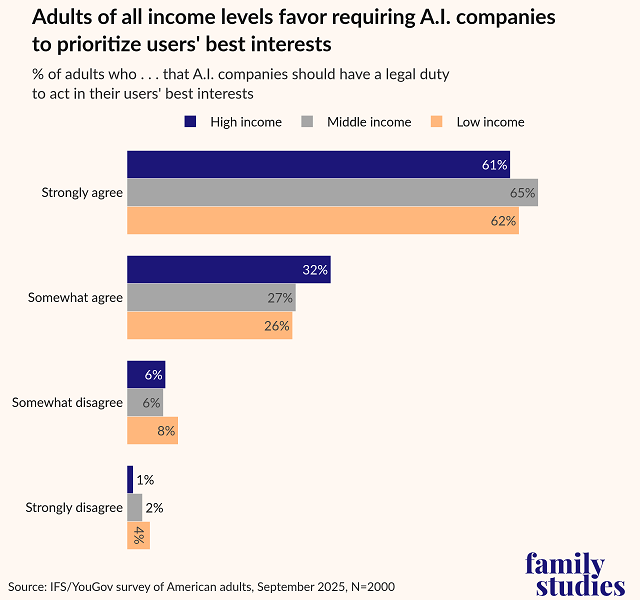

But the right to sue for damages is one thing. Do Americans also believe that these A.I. companies should be required by law to design their chatbots with the good of consumers in mind? The answer is once again yes.

We asked our sample, “Do you agree or disagree that AI companies should have a legal duty to act in their users’ best interests, similar to how doctors and lawyers owe duties to their patients and clients?” (Such a policy is otherwise known as a “duty of loyalty.”)

Overall, 90% of Americans agree that A.I. companies should have a legal duty of loyalty to their users. Support for a duty of loyalty is also strongly bipartisan: 93% of Harris voters and 92% of Trump voters support A.I. companies having a duty of loyalty to their users.

Agreement is also universally strong among income groups, with the lowest level of support being from low-income Americans, who still support the duty of loyalty at 88%.

When examining how different age groups viewed this policy, we found that support is strongest among older Americans, but adults aged 18-34 still support the measure at 88% (that is, by approximately 9 to 1).

In sum, Americans overwhelmingly agree that serious policy measures should be enforced to ensure that A.I. chatbots do not harm kids and that parents and lawmakers should have robust remedies to ensure good behavior and industry compliance. Support for these provisions is so broad that it is practically universal. This survey gives us absolute confidence about where American voters stand on this issue. This is a mandate.

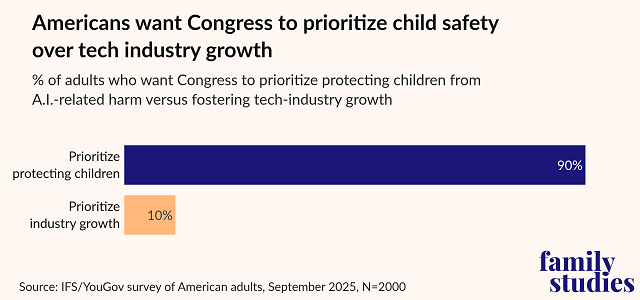

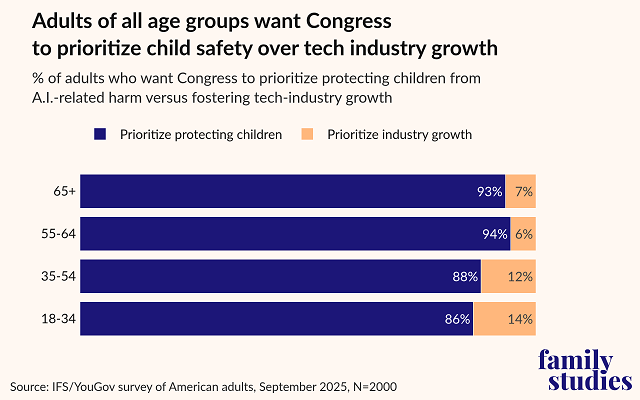

But what do Americans want Congress to prioritize: protecting children from predatory behaviors by A.I. chatbots, or protecting a vital industry from overzealous lawmakers and regulators?

In a randomized format, we asked voters which of the two policies Congress should put first. Should it prioritize “preventing states from passing their own AI laws that could burden tech companies and slow industry growth,” or should it prioritize “passing guardrails to protect children from AI-related harms such as addiction, sexual exploitation, and suicide”?

The preference of the American people was clear: 90% of Americans want Congress to prioritize establishing safeguards to protect children.

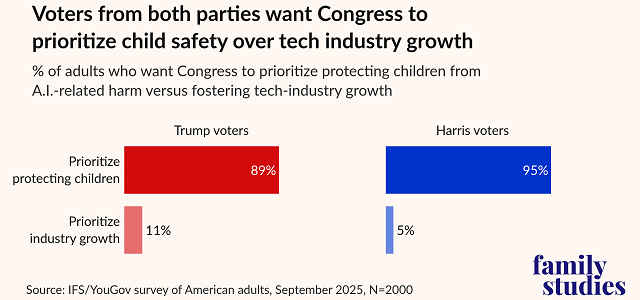

And, just as we saw above, all age groups, all income brackets, and both parties agree, by a wide margin, that the priority of Congress should be to protect children rather than to foster innovation by keeping states from regulating A.I. companies.

Prioritizing guardrails is the bipartisan preference: a staggering 89% of Trump voters and 95% of Harris voters chose it.

Why do Congress and the American people have such different priorities on A.I.? We don't know for sure, but it is worth recognizing that this divergence is extremely large. American voters clearly do not want Congress blocking states (or, we can also assume, the federal government) from protecting citizens from generative A.I.

But why do Americans want Congress to prioritize child safety over fostering A.I. innovation? We think the answer here is more obvious. Most Americans welcome innovation, but not at the expense of the well-being and flourishing of our loved ones, especially children. Though Section 230, a legal provision that has shielded social media companies from liability, is not a household name, an overwhelming majority of Americans clearly do not want to experience another one. Such industry carveouts and protections have permitted Big Tech to lay waste to an entire generation of young Americans. And now, given the extraordinary power of generative A.I., the stakes are far greater.

There is a clear choice to make. The American people want safeguards. Will Congress listen?

Michael Toscano is a Senior Fellow and Director of the Family First Technology Initiative at the Institute for Family Studies. Ken Burchfiel is a Research Fellow at the Institute for Family Studies.

*Photo credit: Shutterstock

Endnotes

1. See the appendix below for the original wording of our A.I.-related survey questions.

2. Our original survey results contained birth-year data, rather than age data. We classified respondents in the 18-34 range if they were born in or after 1991; in the 35-54 range if they were born between 1971 and 1990; in the 55-64 range if they were born between 1961 and 1970; and in the 65+ range otherwise. This classification approach may have resulted in incorrect age range assignments for a small group of respondents.

3. For this analysis, respondents with a family income below $50,000 were classified as low-income; those with family incomes greater than $50,000, but less than $120,000 were classified as middle-income; and those with incomes greater than or equal to $120,000 were classified as high-income.
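The classification rules in endnotes 2 and 3 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis code used for the survey. All function names are hypothetical. Two details are assumptions: the endnote does not say how a family income of exactly $50,000 was treated (the sketch places it in the middle bracket), and `survey_year` is a parameter because the field date is not given.

```python
def age_range(birth_year: int) -> str:
    """Map a birth year to the age range described in endnote 2."""
    if birth_year >= 1991:
        return "18-34"
    if birth_year >= 1971:
        return "35-54"
    if birth_year >= 1961:
        return "55-64"
    return "65+"


def income_bracket(family_income: float) -> str:
    """Map a family income in dollars to the bracket described in endnote 3.

    Assumption: an income of exactly $50,000 falls in the middle bracket;
    the endnote leaves that boundary case unspecified.
    """
    if family_income < 50_000:
        return "low-income"
    if family_income >= 120_000:
        return "high-income"
    return "middle-income"


def possible_ages(birth_year: int, survey_year: int) -> tuple[int, int]:
    """Return the two ages a respondent could be on the survey date.

    Someone whose birthday has not yet occurred in the survey year is one
    year younger than the simple difference between the two years.
    """
    upper = survey_year - birth_year
    return (upper - 1, upper)
```

If the survey was fielded in 2025 (an assumption), `possible_ages(1990, 2025)` returns `(34, 35)`: a respondent born in 1990 might be 34, yet `age_range(1990)` places them in the 35-54 range. This is the kind of boundary misassignment that endnote 2 acknowledges.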

APPENDIX

The full text of each of our four A.I.-related survey questions is as follows:

1. Do you agree or disagree that technology companies should be PROHIBITED from deploying AI chatbots that engage in sexual conversations with minors? (Respondents could choose "Strongly agree," "Somewhat agree," "Somewhat disagree," or "Strongly disagree" for this question as well as for questions 3 and 4.)

2. In your opinion, which of the following should be a higher priority for Congress right now? [The order of the following two responses was randomly chosen for each respondent.]

a. Preventing states from passing their own AI laws that could burden tech companies and slow industry growth

b. Passing guardrails to protect children from AI-related harms such as addiction, sexual exploitation, and suicide

3. Do you agree or disagree that families should have the right to sue an AI company if its products contributed to harms such as suicide, sexual exploitation, psychosis, or addiction in their child?

4. Do you agree or disagree that AI companies should have a legal duty to act in their users’ best interests, similar to how doctors and lawyers owe duties to their patients and clients?

Among our 2,000 respondents, three answered "No opinion" to our question about sexual chatbots and kids; two answered "I don't know" to our question about what Congress's priority should be; two answered "No opinion" to our question about whether individuals should be able to sue A.I. companies; and four answered "No opinion" to our question about whether A.I. companies should have a duty to act in users' best interests. These responses were excluded from our analyses.
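The exclusion step described above can be sketched as follows. This is a hypothetical illustration: the function name and the toy response list are invented for the example and are not survey data, and the sketch ignores any survey weighting, which the article does not describe. It shows only how dropping non-substantive answers changes the denominator of an agreement share.

```python
from collections import Counter

# Response options for questions 1, 3, and 4, as listed in the appendix.
AGREE = ("Strongly agree", "Somewhat agree")
DISAGREE = ("Somewhat disagree", "Strongly disagree")


def agreement_share(responses: list[str]) -> float:
    """Share who agree among respondents who gave a substantive answer.

    Any response outside the four agree/disagree options (for example
    "No opinion") is excluded from both numerator and denominator.
    """
    counts = Counter(responses)
    agree = sum(counts[option] for option in AGREE)
    disagree = sum(counts[option] for option in DISAGREE)
    valid = agree + disagree
    if valid == 0:
        raise ValueError("no substantive responses to analyze")
    return agree / valid


# Toy data for illustration only; these are NOT the survey's responses.
toy_responses = (
    ["Strongly agree"] * 6
    + ["Somewhat agree"] * 3
    + ["Somewhat disagree"] * 1
    + ["No opinion"] * 2
)
toy_share = agreement_share(toy_responses)  # 9 of the 10 substantive answers agree
```

In the toy list, two of twelve responses are "No opinion," so the share is computed over the remaining ten. With only a handful of excluded responses out of 2,000, as reported above, the exclusion would shift the published percentages very little.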