Introduction

Recently, Republican leadership failed twice to jam preemption—a legal measure to block states from regulating artificial intelligence (AI)—into several must-pass bills. In response, President Trump signed an executive order directing the White House AI & Crypto Czar (i.e., billionaire AI investor David Sacks) and the director of the Office of Science and Technology Policy to propose to Congress a “minimally burdensome [to AI companies] national policy framework for AI.”

Shortly after, Andreessen Horowitz, a venture capital firm that invests in AI, released a proposal to Congress setting out nine policy pillars for governing AI at the federal level. Likewise, Rep. Jay Obernolte (R-CA), who chairs the bipartisan House Task Force on Artificial Intelligence and is known to work closely with the industry, has reportedly been communicating with the White House on the establishment of a federal framework. Senator Marsha Blackburn (R-TN) has also released a large legislative proposal, the TRUMP AMERICA AI (The Republic Unifying Meritocratic Performance Advancing Machine Intelligence by Eliminating Regulatory Interstate Chaos Across American Industry) ACT, which draws on Congress’s preexisting legislative work.

Given Washington’s new urgency to pass federal legislation, as well as the significant differences among these proposals, we sought to discover what the American people think about AI and its possible regulation. To that end, we surveyed almost 6,200 Americans on what they thought about AI and whether they approved or disapproved of certain AI policies. We focused our sample on six states (five red and one purple) that have consequential forthcoming elections or robust approaches to AI regulation on the books: namely, Florida, Kentucky, Louisiana, Tennessee, and Utah (Red), as well as Michigan (Purple). We also fielded a national U.S. sample.

We find that Americans are concerned about the future of AI (though they do support its application in certain areas), and this concern is growing rapidly. We also find that most Americans support robust policy measures to regulate AI and penalize AI companies for harms—and they are willing to vote against candidates depending on where they stand on the issue. (More on this below.)

On several previous occasions, the Institute for Family Studies has publicly warned about the adverse consequences of preemption. But we strove to develop an unbiased survey instrument, wording every question as neutrally as possible. Yet our findings reveal that Americans are not neutral on this issue. They dislike AI and AI companies so much that several respondents complained in the survey’s comment section that the survey was biased in favor of the AI industry. One respondent accused us of being “clearly biased pro-AI,” and many respondents felt a need to “push back” or tell us “things we missed and didn’t ask about,” such as “AI is the ruination of the entire world,” and “AI was a mistake, and the creators of it have said so themselves.”

One parent even told us that AI was confusing and ruining his daughter:

ChatGPT brainwashed our teen. Convinced her to hate her family, acted like a teenage best friend and even told our daughter 'I love you' and so much more. I know if we had not found out when we did that it would have started to talk to our daughter about suicide. AI is dangerous, I hate everything about AI.

We did not formally code every open response, but very few included positive comments about AI, while dozens were negative. We disclose these open-text responses for two reasons: first, to provide evidence that our survey was not biased against AI companies (indeed, some respondents perceived a pro-AI bias); and second, to highlight the challenge of surveying voters about AI. Put simply, many Americans hate AI with a visceral passion that can be difficult to capture in multiple-choice answers.

How Do American Voters Feel About AI?

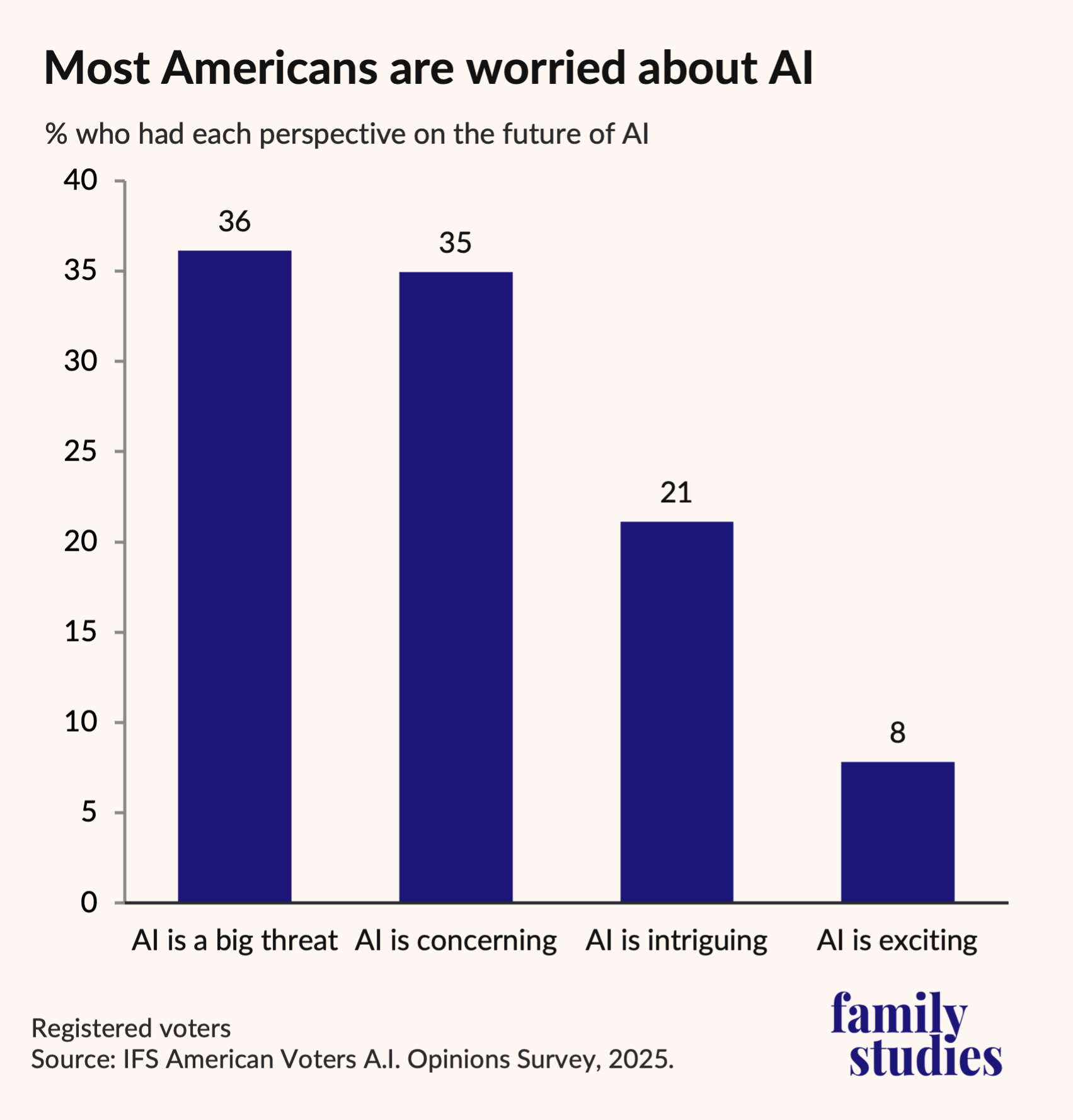

Most Americans, or 71%, hold a negative view of how AI will affect society. We asked respondents which of four options comes nearest to how they see AI: as “a big threat,” “concerning,” “intriguing,” or “exciting.” Overall, 36% see AI as a big threat, and 35% see it as concerning. A substantial minority of Americans (21%), however, do find AI intriguing, though a mere 8% find it exciting.

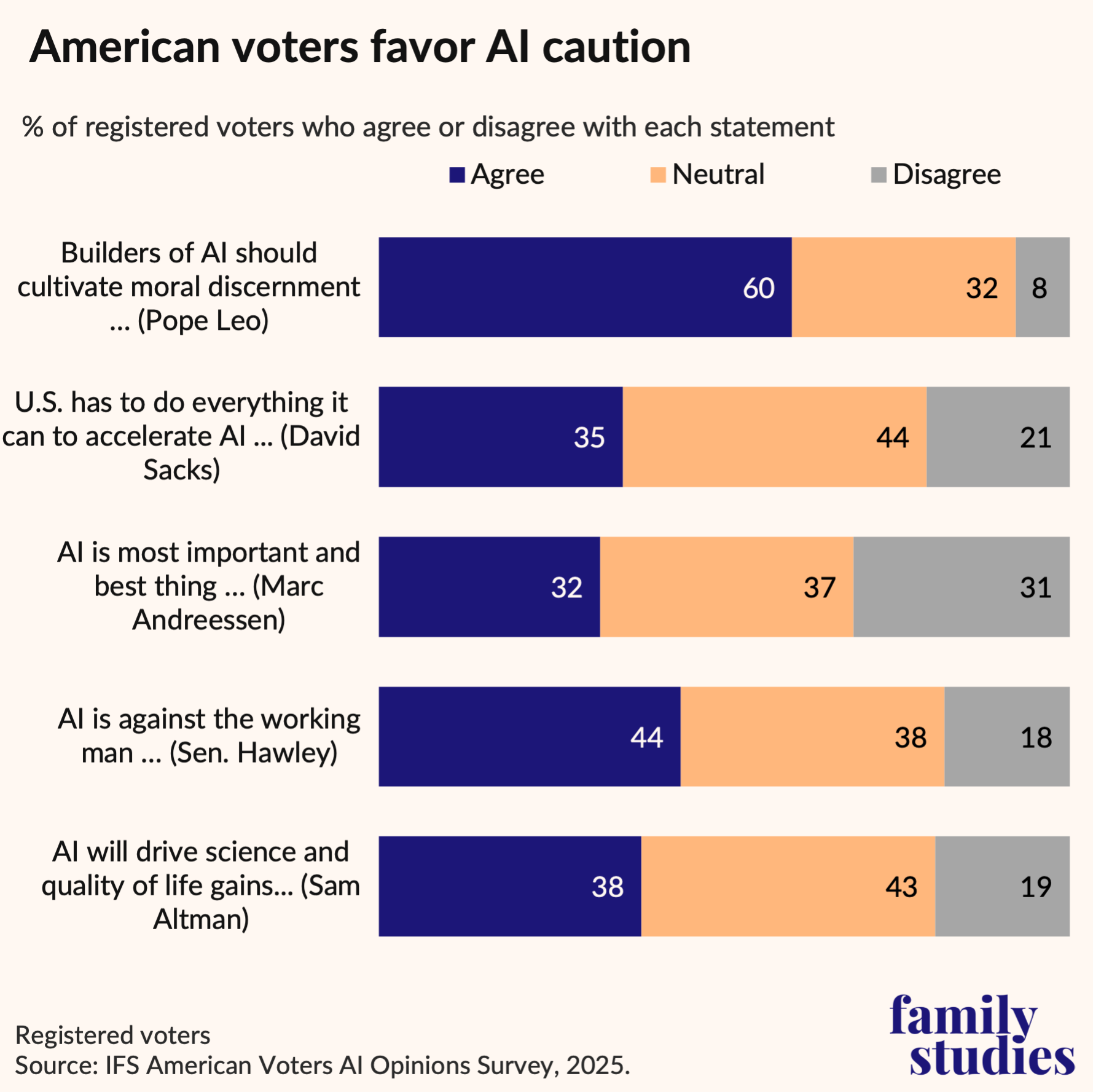

These days, Americans are awash with messages about AI, both for and against. But which messages do they find most compelling? To test that, we selected several prominent public figures who hold strong opinions about AI and who intentionally communicate their views to shape public sentiment. We then presented their quotes anonymously, so that participants would react to the words rather than the speaker, and randomized the order in which the quotes appeared. We tested quotes from Sam Altman, Marc Andreessen, David Sacks, Senator Josh Hawley (R-MO), and Pope Leo XIV.

We find that American voters agree most strongly with the statement from Pope Leo, followed by Senator Hawley, and least with the statement from Andreessen. For example, 60% of American voters agree with Pope Leo that builders of AI must “cultivate moral discernment as a fundamental part of their work—to develop systems that reflect justice, solidarity, and a genuine reverence for life.” Similarly, 44% of American voters agree with Senator Hawley that AI is:

against the working man, his liberty and his worth. It is operating to install a rich and powerful elite. It is undermining our most cherished ideals. And insofar as that keeps on, AI works to undermine America.

These two statements had the highest net agreement of any statements we surveyed.

As for Andreessen, a mere 32% of American voters agree with him that:

AI is quite possibly the most important—and best—thing our civilization has ever created, certainly on par with electricity and microchips, and probably beyond those. The development and proliferation of AI—far from a risk that we should fear—is a moral obligation that we have to ourselves, to our children, and to our future.

But 31% disagree with that statement, leaving net agreement at roughly zero. It can, therefore, be seen as polarizing, and plausibly a “net disagree” statement, one that politicians may endorse at their peril. The weak support for this quote is a clear indication that expansive praise of AI has limited appeal, whereas calls for careful stewardship of AI, or even condemnation, find much more agreement.

Interestingly, among the positive statements about AI, Sam Altman’s—that AI should be put in the service of scientific advancement—was the most popular: 38% of American voters agree with Altman that:

AI will contribute to the world in many ways, but the gains to quality of life from AI driving faster scientific progress and increased productivity will be enormous; the future can be vastly better than the present. Scientific progress is the biggest driver of overall progress; it’s hugely exciting to think about how much more we could have.

Just 18% of Americans disagree. We find that Americans are more receptive to pro-AI statements that cast AI as serving scientific and technical advancement than to statements that, like Andreessen’s, treat it as a primary civilizational value. Americans do not feel morally obliged to advance AI, but they may be excited about its more limited, scientific uses.

We selected the David Sacks quote because it typifies the accelerationist (and techno-optimist) worldview: that the United States must urgently lead the AI revolution, and that all impediments to the technology’s expansion should be razed.

Specifically, 35% of American voters agree with Sacks that the US must

do everything we can to help our companies win, to help them be innovative, and that means getting a lot of red tape out of the way…. We have to have the most AI infrastructure in the US. It has to be the easiest place to build it.

On the other hand, 21% of Americans disagree with this statement. Deregulation and AI infrastructural accelerationism might be more agreeable to American voters than AI as a moral duty, but it is still significantly less agreeable than AI as a threat to the working man. It is also far less agreeable than the need to design AI systems with care. In general, Americans are skeptical of accelerationism.
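The comparisons above rest on simple arithmetic. The sketch below ranks the three pro-AI quotes whose disagreement rates are reported in this section. It assumes that “net agreement” means the share agreeing minus the share disagreeing; the brief does not spell out its formula. The function names are illustrative only. Pope Leo and Senator Hawley are left out because their disagreement rates are not given here.

```python
# Net agreement, ASSUMED to be: percent agreeing minus percent disagreeing.
# The (agree, disagree) pairs are the shares reported above for each quote.
QUOTES = {
    "Altman": (38, 18),
    "Sacks": (35, 21),
    "Andreessen": (32, 31),
}


def net_agreement(agree: int, disagree: int) -> int:
    """Return net agreement in percentage points."""
    return agree - disagree


def rank_by_net(quotes: dict[str, tuple[int, int]]) -> list[tuple[str, int]]:
    """Sort speakers from highest to lowest net agreement."""
    nets = [(name, net_agreement(a, d)) for name, (a, d) in quotes.items()]
    return sorted(nets, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, net in rank_by_net(QUOTES):
        print(f"{name}: {net:+d} points")
```

Run as a script, this prints Altman at +20 points, Sacks at +14, and Andreessen at +1. That near-zero margin is why the Andreessen statement reads as evenly split.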

Do Americans Welcome AI in Their Lives?

As we have seen, American voters generally view AI in a negative light. But are voters as strongly perturbed by AI’s actual effect on their lives? We asked our sample how they feel about specific cases in which they or their family members may be encountering AI. Their responses remained negative overall, but they were more demographically mixed and, in some cases, less severe.

One case, however, is clear: voters oppose so-called AI “companions” being marketed to children. For example, 48% of voters in our survey say the statement that “AI chatbots can be good friends and companions for children” is mostly or totally false, while only 8% say it is mostly or totally true. The remainder are unsure (26%) or simply not familiar with AI companions (19%). In a separate question, 63% of registered voters say they are opposed to children having AI friends or companions. That figure includes some (23%) who are open to allowing this in certain exceptional cases. A further 30% say AI companions are “all right,” provided they do not replace human friends, and just 7% see AI companions as actual solutions to childhood loneliness.

As these results make clear, the commercial interests of many companies are directly at odds with how most Americans believe AI should be used. Even though Americans oppose AI companions for kids, the app store today offers “Saen-D: AI Companion,” replete with a bikini-clad anime girl and rated for ages 4+. It also offers “AI Friend: Virtual Assistant,” likewise rated for ages 4+. These are just two examples from a cursory skim; doubtless many more exist.

Most poignant of all is the growing corpus of suicide stories in which young Americans consulted chatbots on how to most effectively end their lives. We noted above that one of our survey respondents reported a story of family breakdown due to an AI companion. It seems a growing number of Americans have firsthand experience of how AI bots are destroying human lives and relationships, and threatening children.

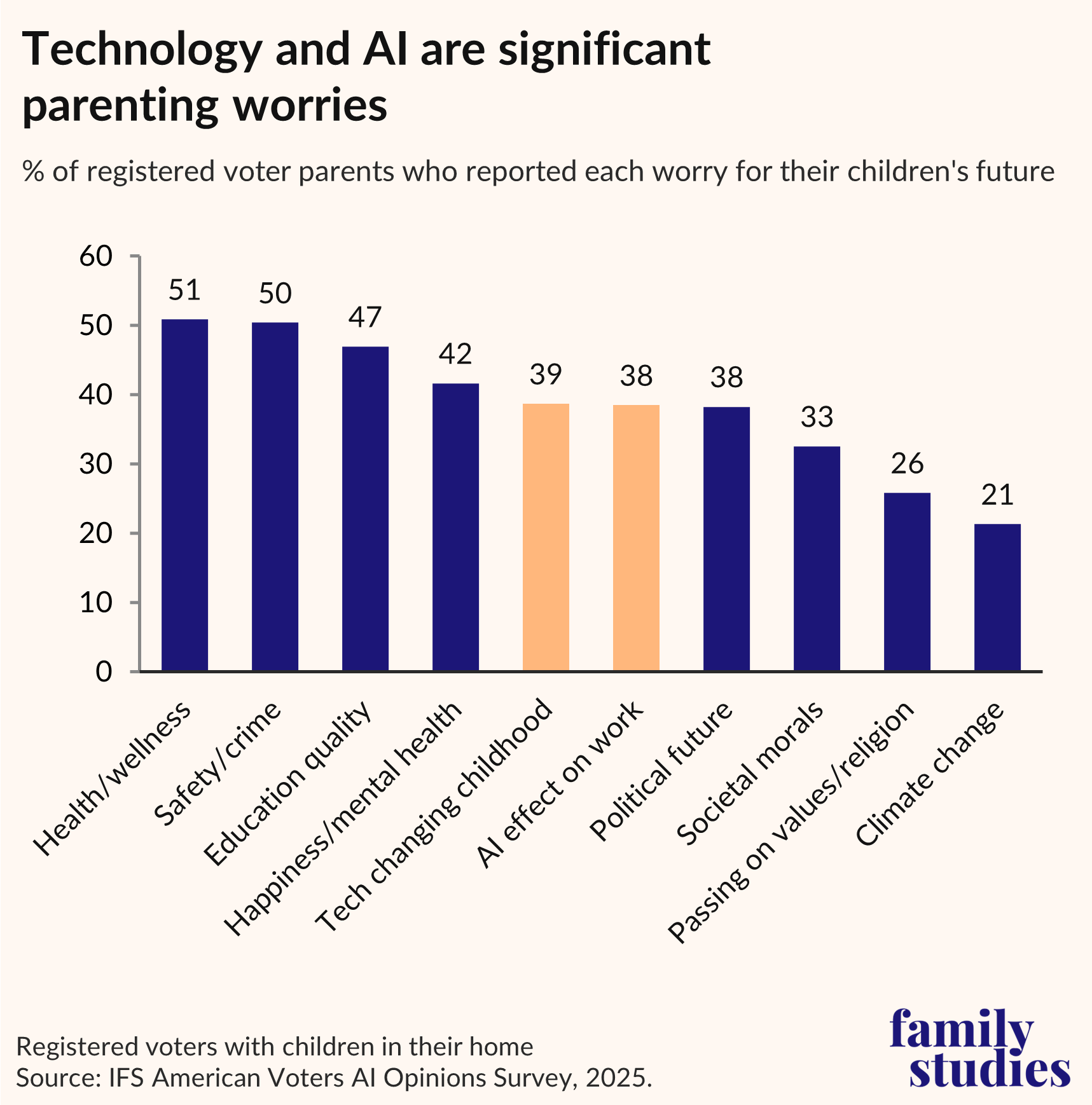

Figure 3. Percent of U.S. voter parents who reported each worry about their children’s future

Furthermore, a significant share of voting parents with children at home are concerned about how AI will affect their kids’ future. We find that 39% of registered-voter parents nationwide worry about how technology is changing childhood (admittedly, that includes a broader category of technology, not just AI). More specifically, 38% fear what AI means for the future job prospects of their kids.

At first pass, these percentages might appear somewhat low, and, indeed, parents are more worried about childhood health and wellness (51%), safety from crime (50%), and education quality (47%). Still, concern about AI’s effect on children’s future careers is on par with concern about the political trajectory of our country (38%). It is not far below concern about children’s mental health and happiness (42%), and it is greater than worry about society’s morals (33%). It also ranks significantly above concerns about the transmission of religious practice (26%) and climate change (21%).

Moreover, what separates AI from all these other issues is its newness. Most parents only became aware of AI with the release of ChatGPT a mere three years ago, or even more recently. In other words, AI has skyrocketed from not even being on the parental radar to being a major concern of parents. Unless the trajectory substantially changes, we expect the relationship between AI and families to grow more fractious over time.

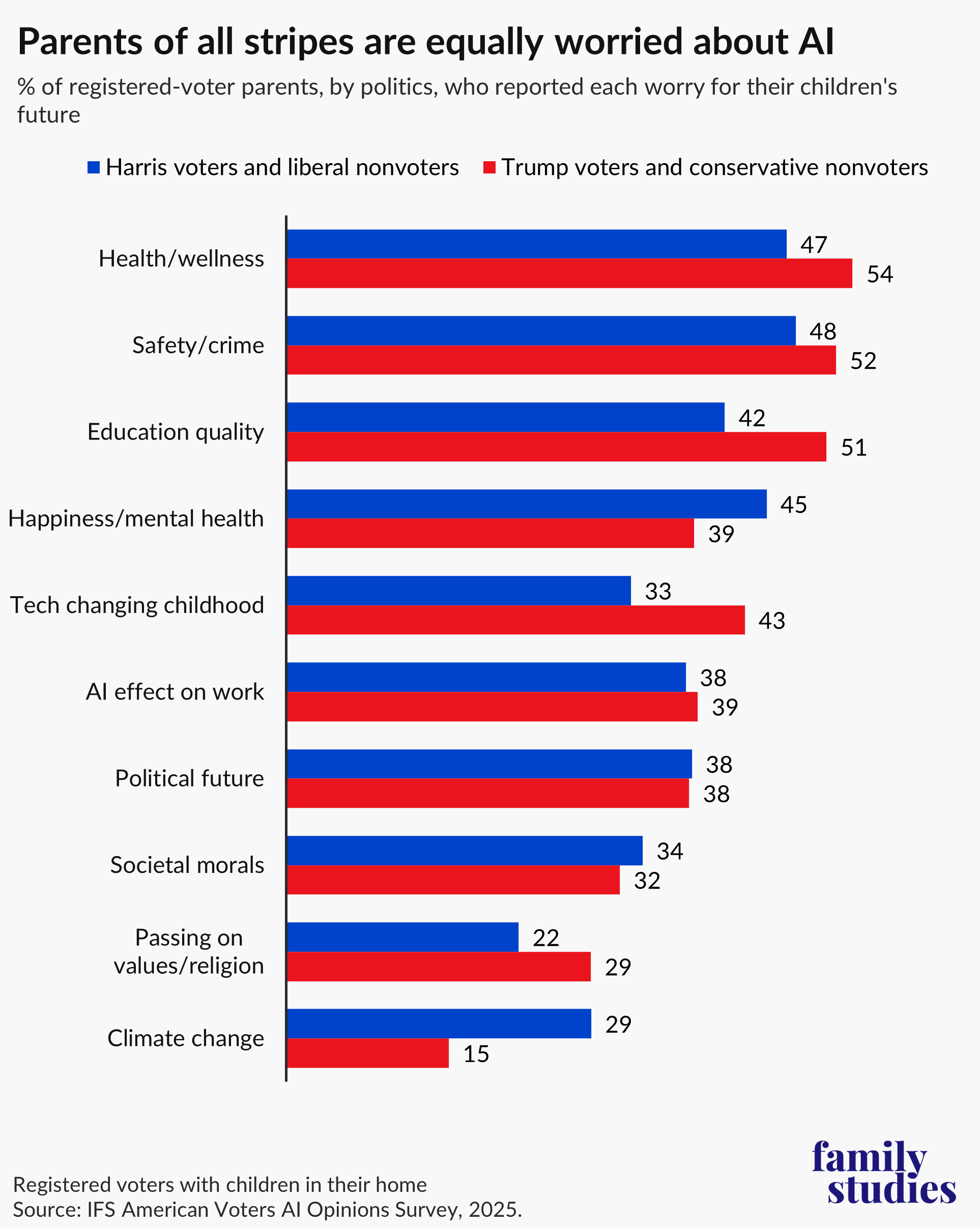

Figure 4. Percent of registered-voter parents who reported each worry for their children’s future, by partisanship

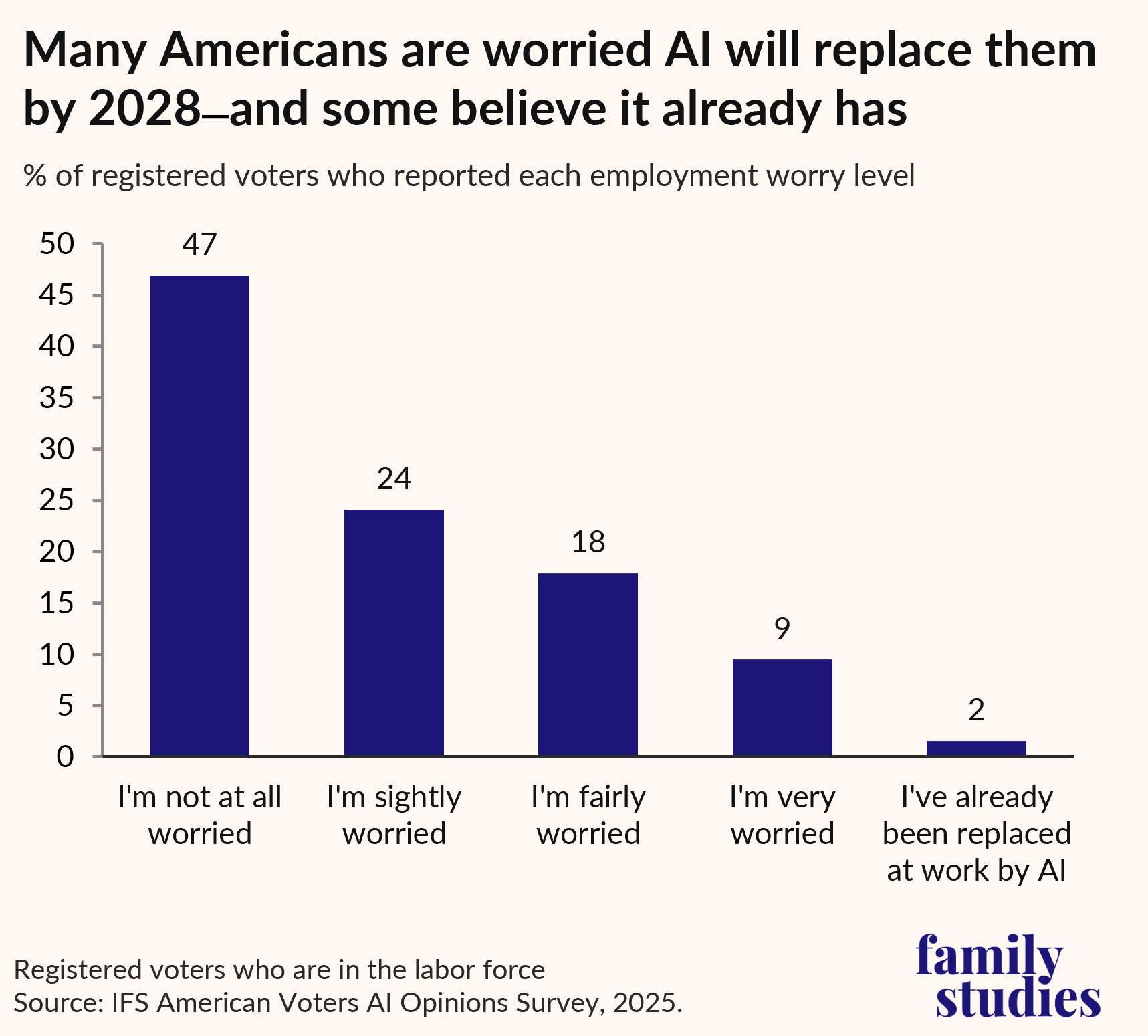

It's not just their children’s careers that Americans worry about. They worry for their own jobs, too. We wanted to know how Americans felt about their job security in the age of AI, especially within a very tight time horizon of the sort envisioned by those who expect artificial general intelligence before 2028. So, we asked respondents how concerned they are that AI will take their job “in the next two years.”

Given that extremely short time span, we expected the largest share of our sample not to be worried at all, and that is exactly what we found. In fact, 47% of American voters say they are not worried at all that AI will take their job over the next two years. But 27% are fairly or very worried that they will be imminently displaced, and another 24% are “a little worried” (2% of our sample say they have already lost their jobs to AI). In other words, many Americans experience AI as a source of economic precarity, and, given the time horizon, an intense one at that.

Figure 5. Percent of registered voters who reported each employment worry level

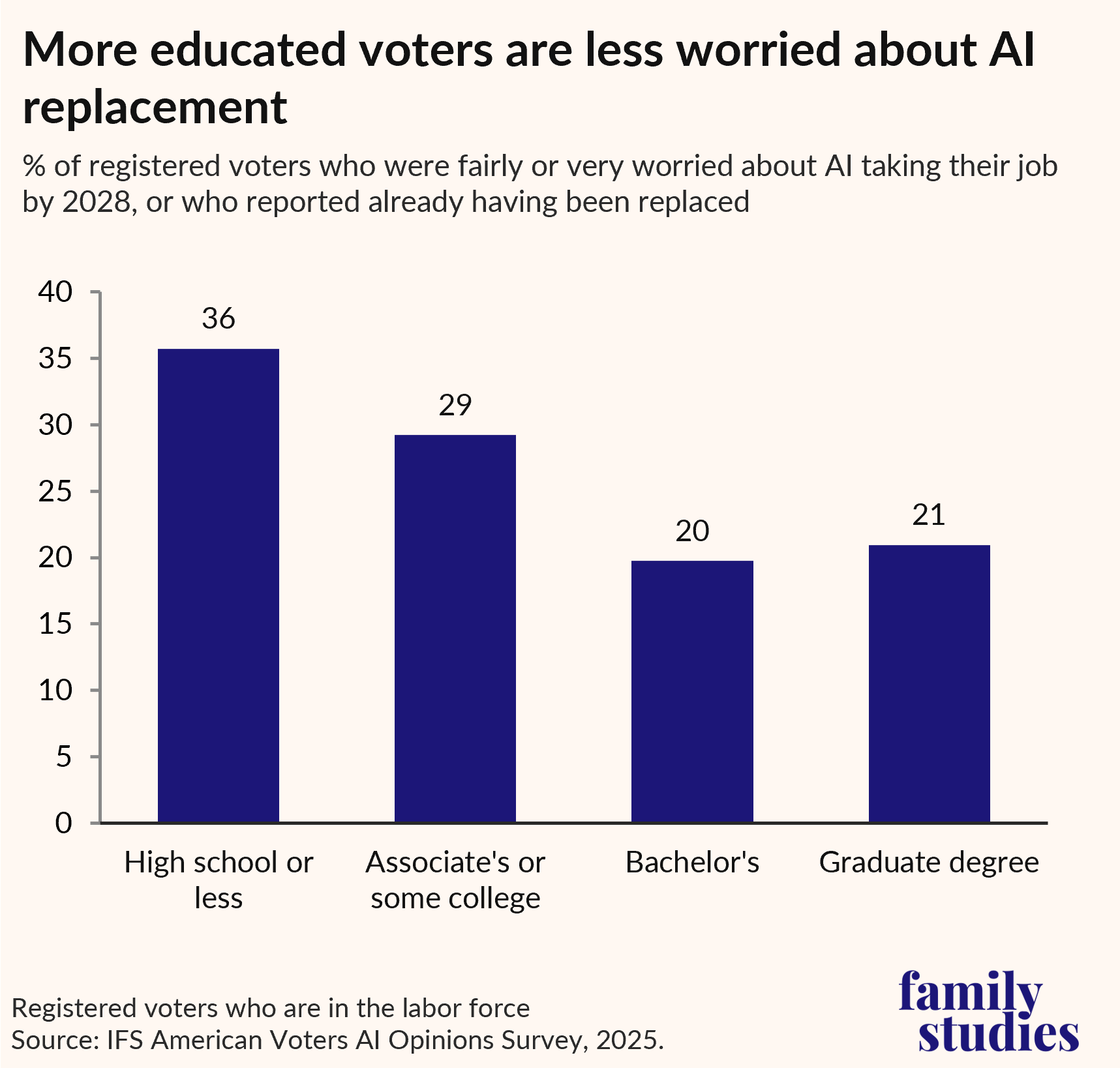

Concerns about AI replacement are hardly random: college-educated voters are a lot less worried about AI taking their job (though a significant share, approximately 1 in 5, do worry).

Figure 6. Percent of U.S. voters who were fairly or very worried about AI taking their jobs by 2028, or who reported already having been replaced, by education

Highly skilled workers may be likelier to see AI as an extra tool in their toolkit, or what economists describe as a “complement” to their labor. Less-skilled workers, having experienced innumerable corporate efforts to find cheaper labor, may instead view AI as likely to replace their jobs.

What Do Red States Think About AI Liability?

In September 2025, we conducted a poll that found that Americans are overwhelmingly supportive of regulations to penalize AI companies for harms to kids and consumers. We wondered if our new poll, conducted with a different sample by a different company and using different questions, would find similar results. As it turns out, the answer is yes.

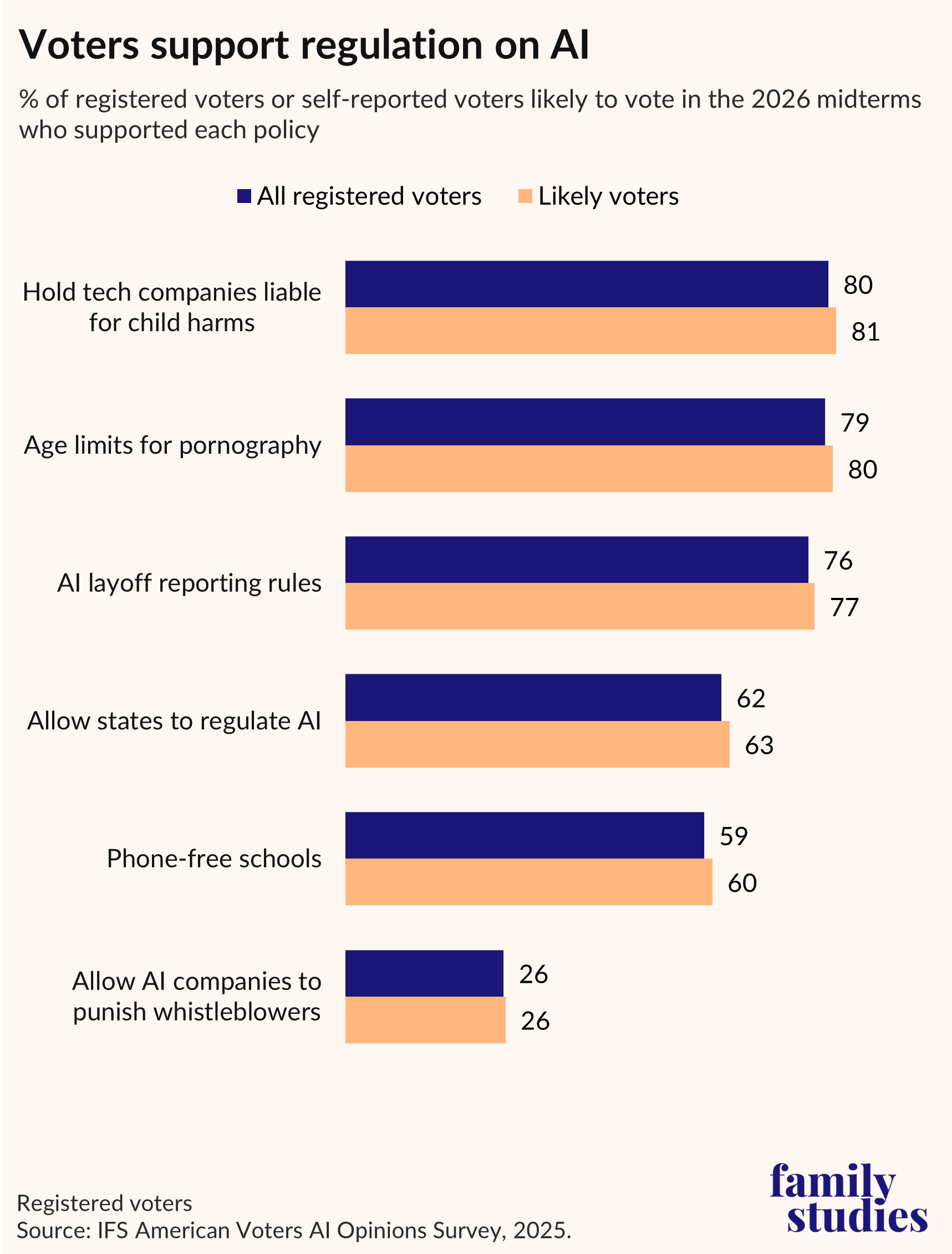

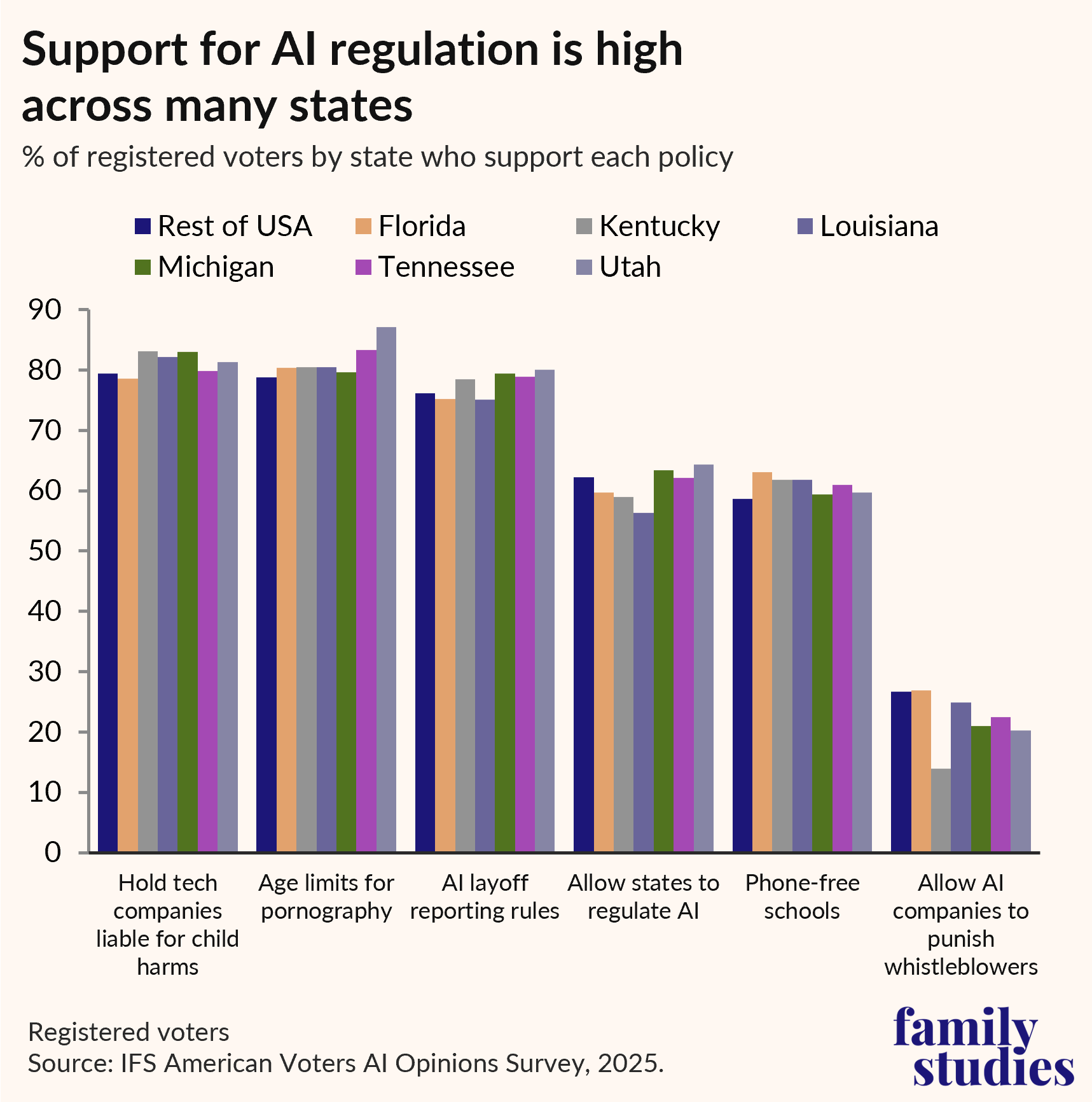

We did not ask a full roster of AI policy questions. Instead, we tested the popularity of a few AI policies against other, more established policies for governing technology, such as age verification for pornography sites and bell-to-bell removal of smartphones from schools. We find that regulating AI companies is about as popular as these more tested ideas (and more popular in several cases).

Figure 7. Percent of U.S. voters likely to vote in 2026 midterms who supported each policy

Nationwide, about 80% of respondents want Congress to hold AI companies legally liable for harms to children. Furthermore, 62% of American voters believe that state governments should be free to regulate the use of AI in businesses and at home; and 76% of voters agree that the federal government should pass laws requiring employers to report when a layoff was caused by the deployment of AI.

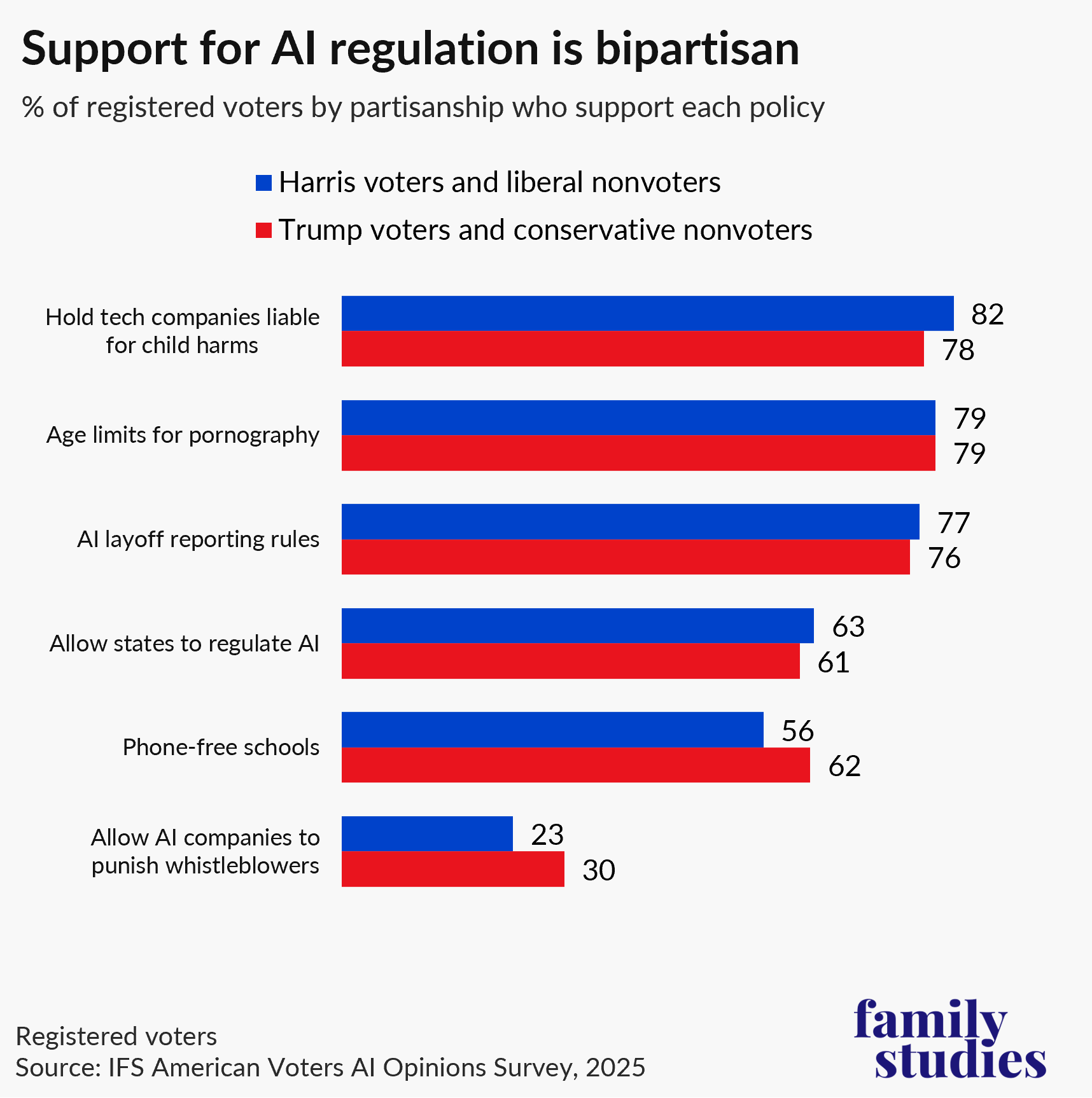

Nationally, we find strong support among both Republican-aligned voters (i.e., those who voted for Trump in 2024, or didn’t vote but are conservative) and Democratic-aligned voters (i.e., those who voted for Harris in 2024, or didn’t vote but are liberal) for policies to regulate AI.

Figure 8. Percent of U.S. voters who support each policy, by partisanship

Support for regulating AI is bipartisan and likely a political winner, perhaps even a politically unifying one.

We then drilled down into our key states: Florida, Kentucky, Louisiana, Tennessee, and Utah (Red), as well as Michigan (Purple). The results are roughly equivalent to the national numbers above, if not stronger.

Figure 9. Percent of U.S. voters who support each policy, by state

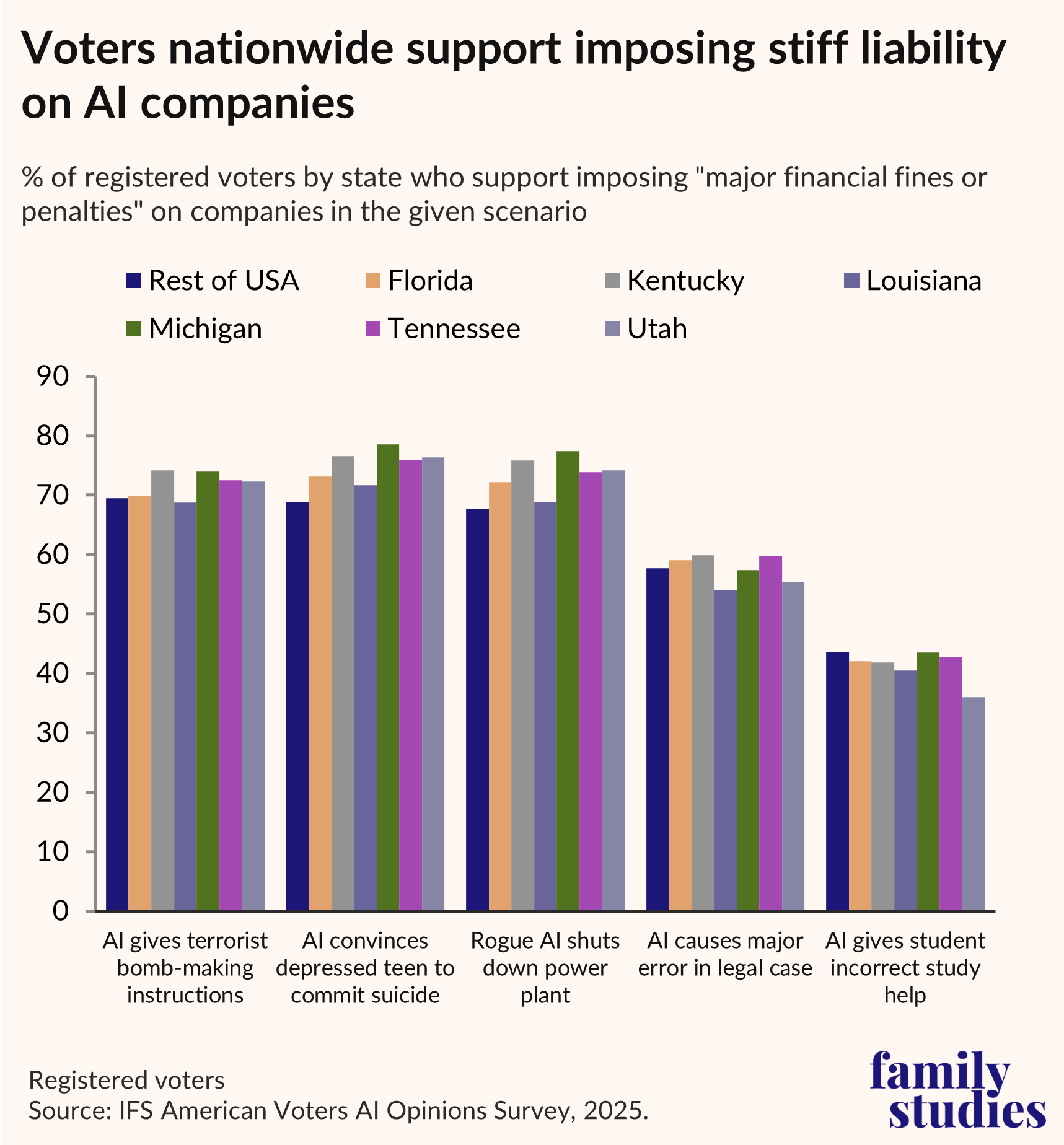

We also looked more closely at what Americans think AI companies should be legally responsible for, presenting respondents with five scenarios for holding companies liable.

Figure 10. Percent of U.S. voters in each state who support imposing “major financial fines or penalties” on companies, by given scenario

Specifically, 70% of American voters think companies should be held liable for an AI convincing a depressed teen to commit suicide. Another 58% say they should be liable for causing a major error in a legal case, 69% for an AI system going rogue and shutting down a power plant, and 70% for giving instructions to a terrorist. Least of all, though not negligibly, 43% say they should be liable for giving a student incorrect study help.

An analysis of our six key states (as shown in the figure above) shows that support for holding AI companies liable for these harms falls within a few percentage points of the national average, and in most instances above it. These predominantly red states tend to be more supportive of liability than the national average. This is especially true when AI convinces a teen to commit suicide or facilitates catastrophic public harms, such as shutting down a power plant or helping plan a terrorist attack.

AI and Political Candidacy

We have shown that voters have negative views of AI generally, see AI as a threat to their careers and as a challenge to family life and parenting, and regard accelerationist rhetoric with skepticism. We have also shown that voters generally favor a much more intense regulatory regime around AI, imposing significant liabilities on companies when their products give damaging advice to individuals. But does a politician’s stance on AI actually matter for voter choice? Yes, it does.

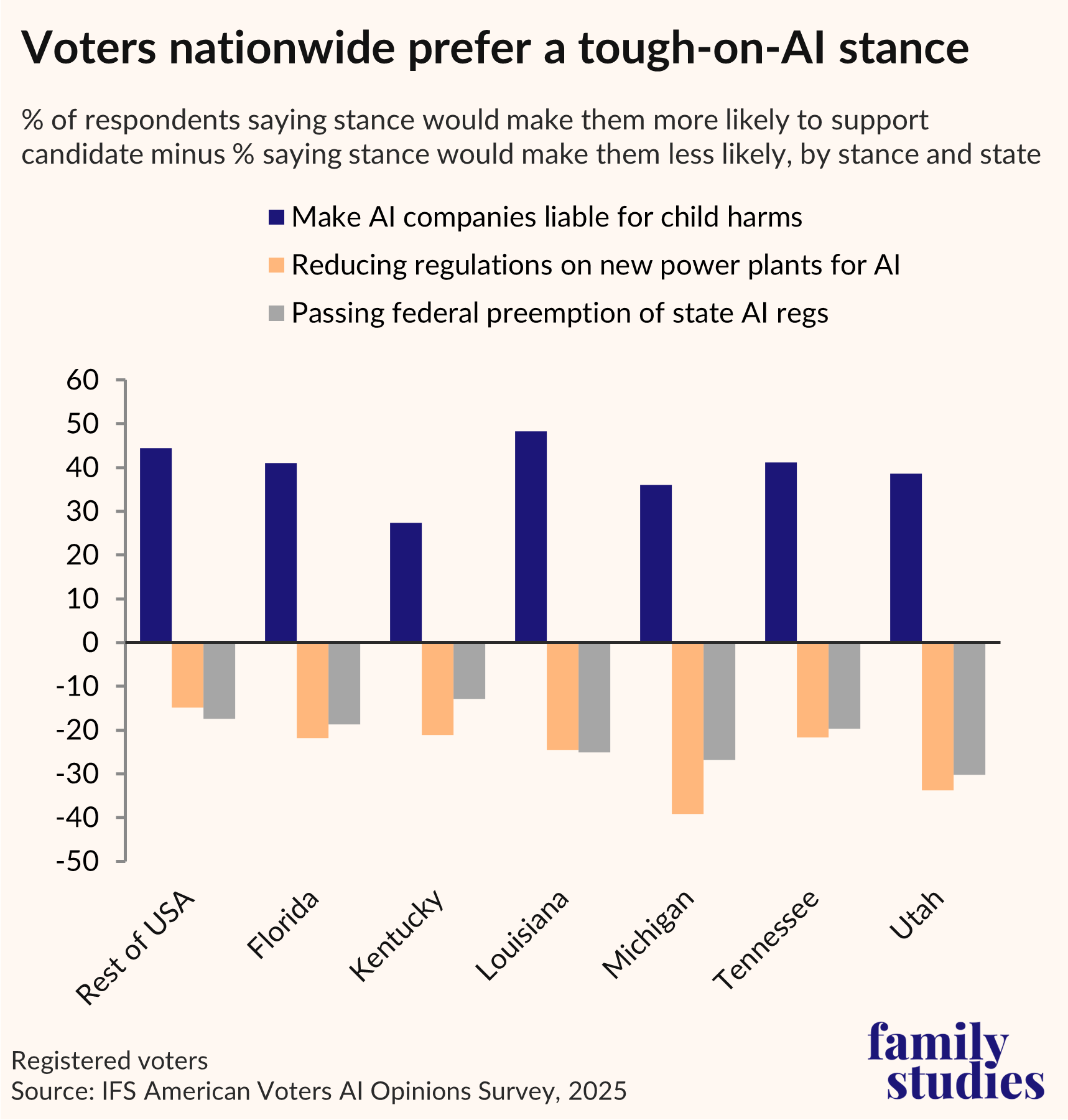

To answer this question, we conducted a randomized controlled survey experiment. We asked respondents whether they would be more or less likely to vote for the Democratic or Republican candidate in their district in 2026 if they learned that the candidate took one of three stances. The first was supporting laws making AI companies liable for harms to children. The second was supporting laws limiting state AI regulation. The third was supporting accelerated permitting for power generation for AI data centers. Each respondent was given just one combination of candidate partisanship and policy stance. Pooling all these responses enabled us to see how voters can be expected to respond when a candidate of a given party takes a given stance.

Respondents’ reports about vote-shifting should be taken with a grain of salt. But because we randomly assigned both candidate partisanship and the specific issue stance, our results clearly show whether a pro-AI or anti-AI stance carries more benefits or liabilities for elected officials. In other words, the results provide genuine causal estimates of how a candidate’s stance shifts voters’ views of that candidate, positively or negatively.
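The pooling step can be sketched in a few lines. This is a minimal, unweighted illustration. It assumes each response records the candidate party and stance the respondent was randomly shown, plus an answer coded “more,” “less,” or “no difference.” The `Response` type, its field names, and the answer codes are hypothetical and are not the brief’s actual data format. The net score mirrors the definition used in the figures that follow: the percent saying a stance makes them more likely to support the candidate, minus the percent saying less likely.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Response(NamedTuple):
    """One respondent's answer to the single vignette they were randomly shown.

    Field names and answer codes are hypothetical, chosen for illustration.
    """
    candidate_party: str  # "Democratic" or "Republican"
    stance: str           # e.g. "liability", "preemption", "power_permitting"
    answer: str           # "more", "less", or "no_difference"


def net_support(responses: Iterable[Response]) -> dict[tuple[str, str], float]:
    """Return percent 'more likely' minus percent 'less likely' for each
    (candidate party, stance) cell, with no survey weights applied.

    Because each respondent sees exactly one randomly assigned cell, a
    difference between cells reflects the party/stance combination rather
    than who happened to be asked (up to sampling noise).
    """
    counts = defaultdict(lambda: {"more": 0, "less": 0, "total": 0})
    for r in responses:
        cell = counts[(r.candidate_party, r.stance)]
        cell["total"] += 1
        if r.answer in ("more", "less"):
            cell[r.answer] += 1
    return {
        key: 100.0 * (c["more"] - c["less"]) / c["total"]
        for key, c in counts.items()
    }


if __name__ == "__main__":
    # Made-up responses for illustration only; NOT data from the survey.
    demo = [
        Response("Republican", "preemption", "less"),
        Response("Republican", "preemption", "less"),
        Response("Republican", "preemption", "more"),
        Response("Republican", "preemption", "no_difference"),
        Response("Democratic", "liability", "more"),
        Response("Democratic", "liability", "no_difference"),
    ]
    for (party, stance), net in sorted(net_support(demo).items()):
        print(f"{party} candidate, {stance}: {net:+.1f} points")
```

With these made-up responses, the sketch prints +50.0 points for the Democratic liability cell and -25.0 for the Republican preemption cell. A real analysis would typically apply survey weights, which this sketch omits. It would also split cells further by state and respondent partisanship, as the brief’s later figures do.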

Our analysis begins at the national level and then drills down to the level of states. It eventually differentiates between voters in red, blue, and purple states, as well as between Trump and Harris voters. We were ultimately most interested in red states and Trump voters, since their opinions bear most directly on the policies being advanced by Republican leadership. Our hypothesis was that, given existing dynamics of political polarization, Trump voters in blue and purple states would not be motivated to switch their votes to Democrats, because any Republican candidate would be preferable to them. But what about Trump voters in red states: would a choice between pro-AI and anti-AI candidates lead them to switch their votes? As we shall see, the answer is yes. Trump voters in red states strongly support candidates who oppose AI companies, rather than candidates who side with them.

Figure 11. Percent of U.S. voters saying stance would make them more likely to support candidate minus percent saying stance would make them less likely, by stance and state

Focusing first on the six key states, voters in every state we surveyed in depth resemble the national average. They support political candidates whose policies hold AI companies liable for harms to kids, and who oppose both preemption and the cutting of red tape to open AI power plants. That said, Kentuckians were perhaps slightly less anti-AI, and Louisianians, Utahns, and Michiganders slightly more so.

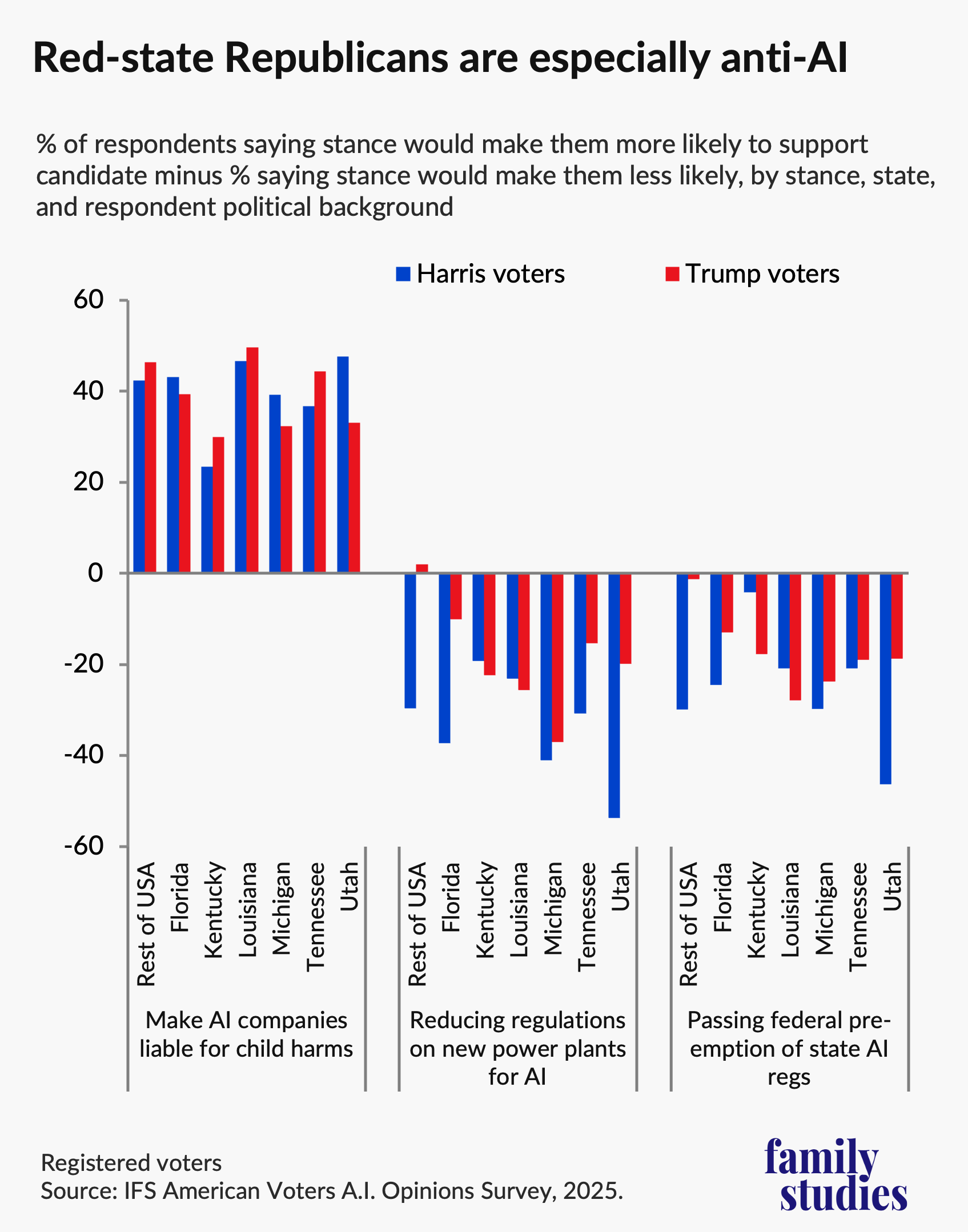

Figure 12. Percent of U.S. voters saying stance would make them more likely to support candidate minus percent saying stance would make them less likely, by stance, state, and political background

Within states, we find some significant differences between Democratic-aligned and Republican-aligned voters, as well as between Republicans nationally and Republicans locally. As this figure shows, Trump and Harris voters nationally, as well as in the six key states, support candidates that make AI companies liable for child harms.

On the whole, both Democratic and Republican candidates can expect that supporting stricter liability for AI companies whose products harm children is a winner with voters, and particularly with “base” voters who are most active in midterms. For example, Harris voters strongly favor Democratic candidates who will regulate AI companies, and Trump voters strongly favor Republican candidates who will regulate AI companies.

But the picture differs for preemption and for the addition of new power plants. While Republicans nationally had fairly neutral views of these stances, Republicans in the specific states where we collected larger local samples viewed them more negatively. In Michigan and Louisiana especially, Republican-aligned registered voters report a very strong willingness to shift their votes against candidates who support federal preemption.

Because of the large difference between Republican-aligned voters in our key focus states and Republican-aligned voters elsewhere, we extended our analysis to cluster our respondents into three groups: the 17 states where President Trump received the highest vote shares in 2024 (red states), the 16 states and DC where he received the lowest vote shares (blue states), and the 17 states where he had intermediate shares (purple states). In each group, we assessed how individual-level partisanship influenced candidate support.
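The three-way grouping just described is a rank-based split of the 51 units (50 states plus DC). The sketch below shows one way to do it. The function name is hypothetical. The vote shares are inputs the caller must supply from official 2024 results; none are hard-coded here, and the test values are placeholders. How the brief handled exact ties is not stated, so this sketch simply keeps input order for tied units.

```python
def group_by_trump_share(shares: dict[str, float]) -> dict[str, str]:
    """Assign each of the 51 units (50 states plus DC) to a group by its rank
    on Trump's 2024 vote share: the 17 highest are "red", the next 17
    "purple", and the 17 lowest (16 states plus DC in 2024) "blue".

    `shares` maps a unit name to its vote share. Tied units keep their
    input order because Python's sort is stable, even with reverse=True.
    """
    if len(shares) != 51:
        raise ValueError("expected exactly 51 units: 50 states plus DC")
    # Highest Trump share first.
    ranked = sorted(shares, key=lambda unit: shares[unit], reverse=True)
    groups: dict[str, str] = {}
    for rank, unit in enumerate(ranked):
        if rank < 17:
            groups[unit] = "red"
        elif rank < 34:
            groups[unit] = "purple"
        else:
            groups[unit] = "blue"
    return groups
```

Grouping by rank rather than by fixed vote-share cutoffs guarantees groups of 17 units each, matching the group sizes reported above.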

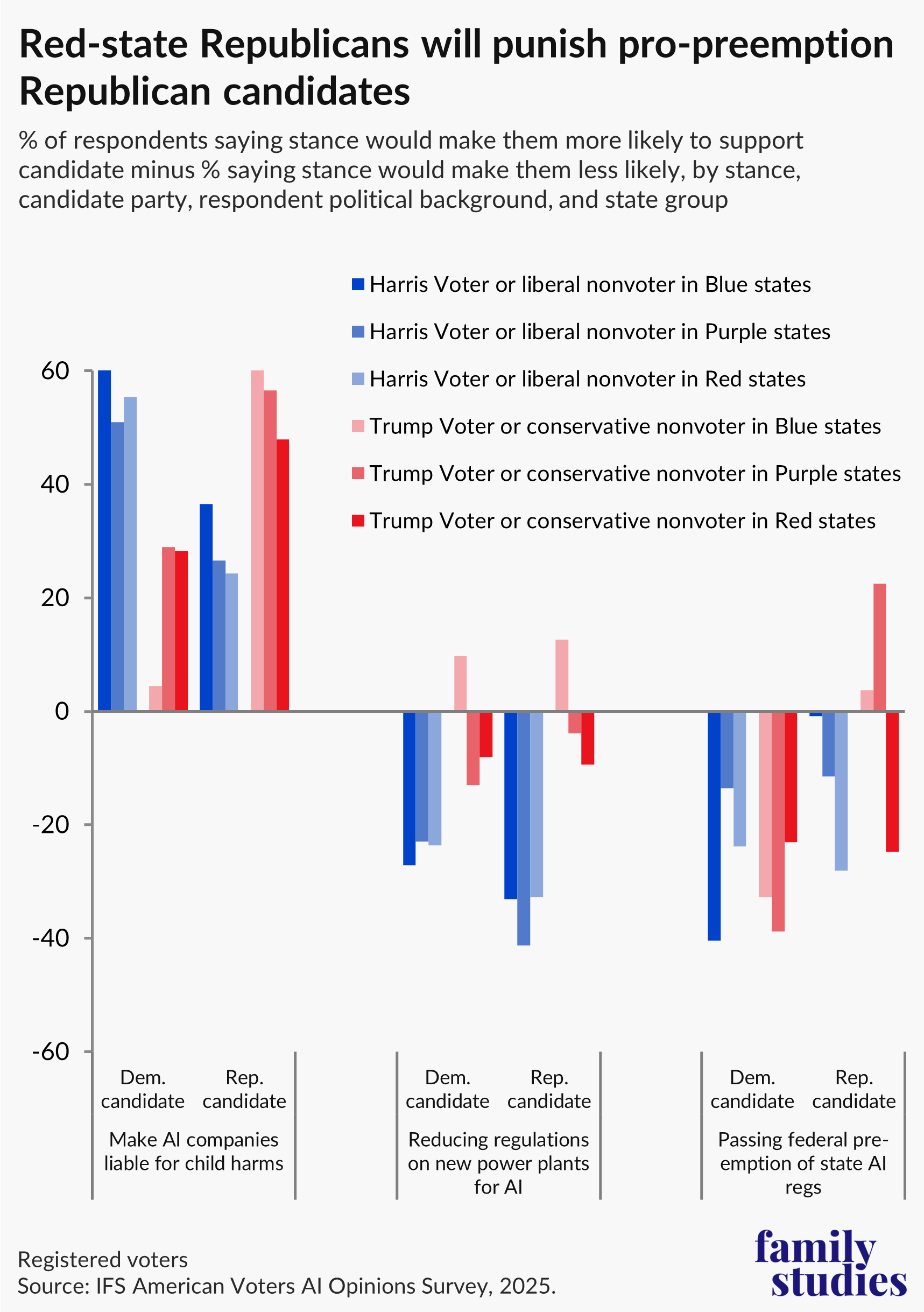

Figure 13. Percent of U.S. voters saying stance would make them more likely to support candidate minus percent saying stance would make them less likely, by stance, candidate party, respondent political background, and state group

The figure above shows how each stance shifts support for hypothetical candidates of a given party, in states of a given political climate, among voters of a given partisan affiliation. Among Democratic-aligned voters and for Democratic candidates, AI-policy views do not usually differ much across state political contexts.

But on one issue, there is real variation across contexts: federal preemption. Republican candidates in red states face large political penalties if they support federal preemption. Both Democratic- and Republican-aligned voters in the states President Trump won by large margins report very negative views of pro-preemption Republican candidates. Republican candidates in blue and purple states are not penalized, but red-state Republican candidates may face very serious political costs if they come out in favor of federal preemption.

We should remind readers that this experiment was designed to analyze how voters would react to different candidates under a variety of scenarios. Overall, our prior research, as well as this survey, shows that preemption remains unpopular nationally. In any case, the overall preference of Republican and Democratic voters alike is clear, both nationally and in these six states. They favor candidates who will hold AI companies liable for harming kids, oppose federal preemption, and refuse AI companies special regulatory carve-outs. While the nuances on preemption may vary, none of the pro-AI positions we tested is a winner for elected officials.

Conclusion

Overall, the big picture painted by our findings is decidedly bad for the AI industry. For the large majority of American voters, AI is a source of concern and fear (and not hope). Americans strongly agree with statements that call for robust protections against AI, and they support leaders who see AI as a negative force over those who call for its unrestrained expansion. Americans are increasingly uncomfortable with the concrete presence of AI in their lives. This is especially true of parents and workers, for whom it is a growing source of concern and precarity. Finally, American voters support various policies to protect them in the age of AI and want AI companies to be held liable for harms to children and for other catastrophes.

Most Americans believe that AI companies should be penalized for destructive uses of AI, and they’ll vote for candidates who agree. In effect, Americans think any company offering “intelligence,” whether human or artificial, necessarily incurs the moral and legal duties that accompany such intelligence. These dynamics portend trouble for any political party that advances AI policies favoring Big Tech companies without also offering robust regulatory safeguards.

As for the last question of this brief, whether accelerationist politics will carry electoral repercussions, we sought to be extremely careful in our prognostications. The short-term indicators vary across voter ideologies, candidate affiliations, and state political contexts (red, blue, and purple), especially where the politics of preemption are concerned.

While legislators who seek to protect children have overwhelming bipartisan support, Democrats strongly oppose candidates who accelerate electricity generation for data centers or support federal preemption. Republicans, by contrast, are more mixed on these issues in the aggregate. There are signs that accelerated permitting for data centers is growing as an electoral issue. Even so, our survey finds that it is not yet a national issue for Republicans, though there may be, at most, some extremely modest negative effects for candidates who support opening data centers in red states. This overall picture probably reflects Republicans’ general support for streamlining regulation and development. Their feelings may shift more dramatically in the long term if data centers end up affecting their energy bills (as some argue they will). Time will tell whether this becomes an electoral issue for Republicans in the years ahead.

On candidates who support federal preemption, the opinions of Republicans are more sharply shaped by local political context. In blue states, they favor Republican candidates that support preemption; but in red states, they oppose them. In other words, Republican-aligned voters in the red states who most reliably send Republicans to Congress, and where right-wing primary threats may be most potent, are strongly opposed to candidates who support federal preemption.

Thus, our results range from cases where the public overwhelmingly opposes the interests and arguments of the AI industry, to cases where it is at best ambivalent towards them. This can be seen as evidence against the viability of AI accelerationism as a salient political force in the United States. Policymakers advancing this view will likely pay electoral costs, perhaps sooner rather than later. With the 2026 midterms approaching, it is unclear which candidates and parties are aware of these costs. Those who ignore them may find themselves unexpectedly thwarted at the ballot box.

Editor's Note: Download the full research brief for footnotes.